Tutorial, tokenization, llm, transformers

Tokenization Shapes Model Vocabulary and Understanding

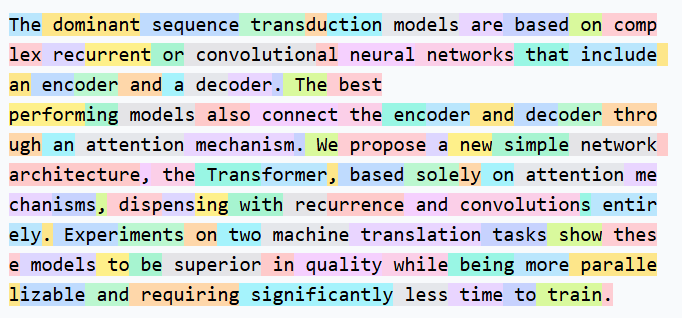

An explainer outlines how tokenization breaks text into subword units before a language model processes its input, with examples such as 'understanding' → 'understand' + 'ing' and 'ChatGPT' → 'Chat' + 'G' + 'PT'. It notes that GPT-3's vocabulary held roughly 50,000 distinct tokens while GPT-4's held about 100,000. A larger vocabulary lets a model cover more words and word fragments with a single token each, so text is represented more precisely for downstream tasks.
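The subword splits in the examples can be reproduced with a small sketch. This is an illustration under stated assumptions, not how GPT models tokenize: they use byte-pair encoding with a vocabulary learned from data, whereas the code below swaps in a simpler greedy longest-match lookup. The `TOY_VOCAB` set and the `tokenize` function are hypothetical names invented for this example, and the vocabulary is hand-picked so that the two words from the explainer split the same way.

```python
# Toy vocabulary, invented for illustration only.
# Real vocabularies hold tens of thousands of entries learned from a corpus.
TOY_VOCAB = {"understand", "ing", "Chat", "G", "PT"}


def tokenize(word, vocab=TOY_VOCAB):
    """Split a word into subword pieces by greedy longest-match against vocab."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest candidate first, shrinking until an entry matches.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # Nothing in the vocabulary matched: fall back to one character.
            pieces.append(word[start])
            start += 1
    return pieces


print(tokenize("understanding"))
print(tokenize("ChatGPT"))
```

With a larger vocabulary, more strings match as a single entry, so the same text comes out as fewer, longer pieces. Adding "ChatGPT" to `TOY_VOCAB`, for instance, would make that word a single token.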