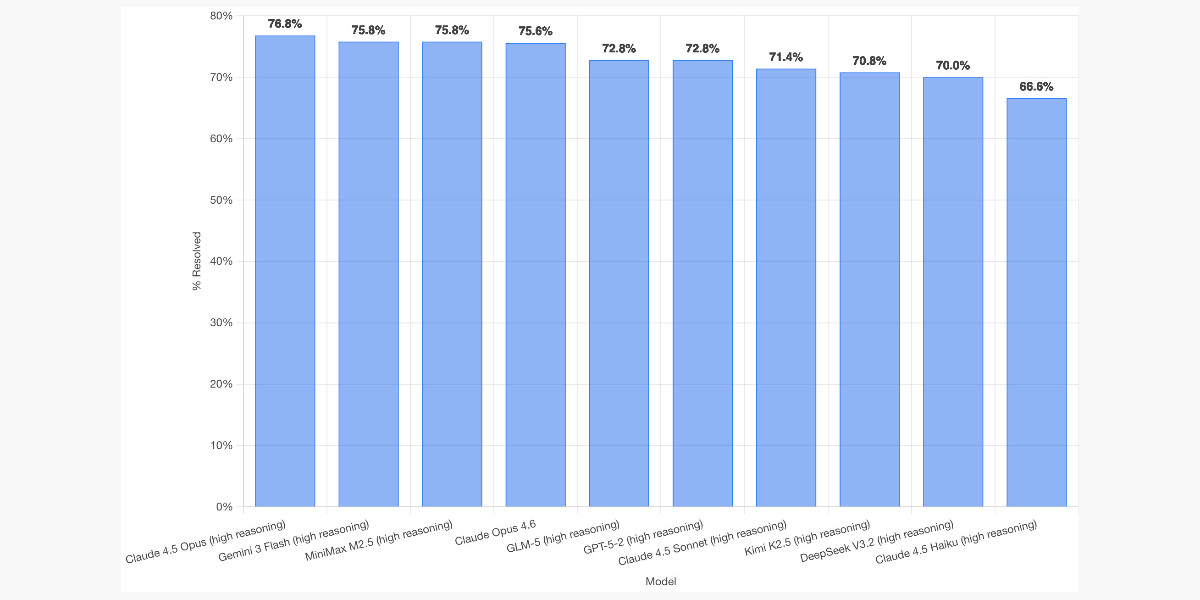

Research, swe bench, code generation, llm, benchmarks

SWE-bench Updates Bash-Only Coding Leaderboard With New Model Rankings

Relevance Score: 8.1

On 19 February 2026, SWE-bench published a fresh full run of its February 2025 'Bash Only' coding benchmark, evaluating models on 2,294 real-world problems drawn from 12 open-source repositories. Claude Opus 4.5 ranked first, followed by Gemini 3 Flash and MiniMax M2.5. OpenAI's GPT-5.2 placed sixth, and GPT-5.3-Codex was absent from the rankings. Every model in the run was given the same system prompt so that the results could be compared fairly.